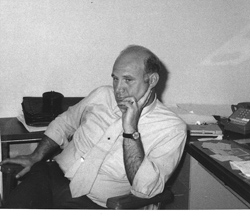

Pat Pistilli is this year’s Kaufman Award winner. I was out of the country for the award dinner, so I didn’t attend, but I talked to Pat earlier today.


Pat, who was at Bell Labs, started what became DAC (then called SHARE, the Society to Help Avoid Redundant Effort) in 1964 along with a co-conspirator from IBM. The first conference was held in Atlantic City that year. When the availability of commercial EDA tools made DAC too big to manage as the all-volunteer organization it had been, Pat left the technical side of design automation to form MP Associates along with his wife Marie. I think that the history of DAC has been well covered elsewhere, so instead I asked Pat what “design automation” was back when he started in the business. After all, transistors were fairly new, printed circuit boards hadn’t been invented, integrated circuits were in research and so on.

He told me about the design system he worked on, known as BLADES (for Bell LAbs DEsign System). It ran on an IBM 704 with 32K of memory. Think about how little that is: 32 gigabytes (too big for a notebook but not for a high-end server) is a million times as much. The computer had 32 tape drives (disks were another thing that hadn’t yet been invented). They built the design system to work on a specific project, the Safeguard anti-missile system for the DoD. It was an electronic system so large that it occupied 3 buildings.

The system was built like this. At the lowest level were modules which contained 3 or sometimes as many as, dramatic pause, 4 transistors with wire-wrap terminals (if you are too young to know what wire-wrap is, then more than you want to know is here). Boards 33″ by 24″ were covered in these modules with gaps in between to run the wires (because if you ran wires over the tops of the modules you’d never be able to open them again for maintenance). Originally there were 8 different kinds of modules but eventually they ended up with about 30 (that sounds familiar in libraries today). Initially these modules were hard-wired into the code but Pat came up with the idea of putting all the components into a file on a magnetic tape and extracting them from there (the first cell-library I guess). The design rules, for example no wire could be longer than 12″, were on another tape.
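To make the idea of a design rule kept on its own tape a little more concrete, here is a minimal modern sketch of the one rule mentioned above: no wire longer than 12″. Everything except the 12″ limit is an assumption for illustration. The terminal names and coordinates are made up, and wire length is estimated as horizontal plus vertical distance on the guess that wires followed the gaps between modules rather than running point to point. It says nothing about how BLADES actually stored or checked its rules.

```python
from dataclasses import dataclass

# The one design rule quoted in the text: no wire longer than 12 inches.
MAX_WIRE_INCHES = 12.0


@dataclass(frozen=True)
class Terminal:
    """A wire-wrap terminal on the board (names and positions are hypothetical)."""
    name: str
    x: float  # inches from the left edge of the board
    y: float  # inches from the bottom edge of the board


def wire_length(a: Terminal, b: Terminal) -> float:
    """Estimate wire length as horizontal plus vertical distance.

    Assumption: wires run along the gaps between modules, so the
    straight-line distance would underestimate the real length.
    """
    return abs(a.x - b.x) + abs(a.y - b.y)


def check_wires(wires, max_len=MAX_WIRE_INCHES):
    """Return (from_name, to_name, length) for every wire over the limit."""
    violations = []
    for a, b in wires:
        length = wire_length(a, b)
        if length > max_len:
            violations.append((a.name, b.name, length))
    return violations


# Two made-up wires: one short, one that crosses much of the board.
wires = [
    (Terminal("M1.3", 2.0, 3.0), Terminal("M2.1", 6.0, 5.0)),
    (Terminal("M1.4", 2.0, 3.5), Terminal("M9.2", 20.0, 10.0)),
]
violations = check_wires(wires)
for from_name, to_name, length in violations:
    print(f"{from_name} -> {to_name}: {length} in. exceeds {MAX_WIRE_INCHES} in.")
```

Keeping the limit as data rather than burying it in the checking code mirrors the move Pat made with the module library: change the tape, not the program.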

These boards were stacked into refrigerator-sized units called frames, with more wire-wrap to construct what today we’d call a back-plane. Lots of these units would then be connected together with manually labeled wires until you’d filled 3 buildings.

Before Pat’s design automation it took 4-5 months to design one of these boards and then another month for the board to be wire-wrapped by hand. Afterward, using the design system, the time was cut to around a month but it still took another month for the hand wire-wrapping.

Then Gardner Denver developed an automatic wire-wrap machine. Pat designed a controller for this (complicated by the need for ‘dressing fingers’ since they couldn’t route point to point and had to avoid wires going over the modules). Now the design automation system could (effectively) directly manufacture the board. That got the time down from a month to a day or two.

This is one example of how manufacturing used to be much more connected to engineering, and delivering a system would often involve people needing to work in all sorts of areas. Software engineers today don’t have to change the design of the semiconductor manufacturing equipment!

The wires that connected the frames were manually labeled. But hand-written labels aren’t always legible, and the glue wasn’t good so they would regularly fall off leading to obvious problems. Pat decided they needed a new way of labeling where the labels could be printed automatically and would stick on the cables properly and never fall off. The only material he could find that seemed like a good starting point was the plastic sheet that 3M used to make band-aids. So he got band-aid material from 3M and would attach it to paper and print the labels using a standard line-printer. But the adhesive still wasn’t good enough so he got the chemical department at Bell Labs to invent a new super-strong adhesive and, further, to develop a coating for the plastic that would accept the ink (so it could be printed) but not dirt so the labels would stay clean and legible. They still needed 3M to supply the original plastic material and to do the die-cutting afterward. Finally, Pat modified a manual wire-wrap gun with a new chuck to create a tool that attached the labels to the wires, inspired by having seen cigarettes being made on a factory tour. These were the first ever machine-prepared labels.

3M actually branched off that part of the business to form a label-making subsidiary called Avery. Yes, the same Avery that makes labels for your inkjet today.

So what happened when three buildings’ worth of electronics was shipped out to an island in the Pacific? Safeguard was constructed because the US figured that it couldn’t build enough interceptors to destroy every incoming missile. But it also figured that the USSR couldn’t afford to build that many warheads, so it would use decoys too. Safeguard analyzed the incoming missile trajectories, looking for tiny differences in flight path to decide which were real and which were decoys. Pat was invited out to the Pacific for the first test and it was a complete success. The system picked out the one real missile from the five decoys and knocked it down.

Interestingly, in the early days there were big problems getting acceptance of this new technology. Designers didn’t want to use the design system since they worried it would obstruct their creativity. And the manufacturing people were worried that the automation would cost them their jobs. When Pat moved to Denver in 1969 it was the first time AT&T had both design and manufacturing under the same roof. When he arrived the manufacturing manager told him “I hate computers.” Back then the design methodology was that Bell Labs would design the system and build prototypes, then the design would be transferred to Western Electric (the manufacturing arm), who would completely re-lay it out. With the design system this became unnecessary: the prototype could be transferred directly into manufacturing, and this became a model for the rest of Western Electric. The system produced cost savings of $2M per year immediately. Since AT&T was a regulated utility with no real way to increase its prices, the only way for it to increase profitability was to reduce its costs. So this was a big deal and the manufacturing manager changed his tune.

Since DAC will be 50 years old in a couple of years, I suggested that it would be interesting to have some other people talk about what design automation was in the 5 decades since it started. Now that we are in the age of billion-transistor chips, it is hard to remember the time when 10,000 gates was a huge design, let alone all the automation necessary to create even earlier systems.

The opening keynote of the 3D conference that I went to was by Subramanian Iyer of IBM. He described work they were doing on fully 3D chips for servers. The approaches I’ve already talked about don’t really work for the highest performance end of the spectrum.

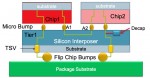

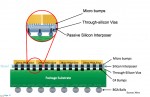

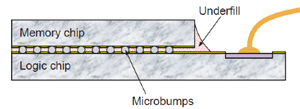

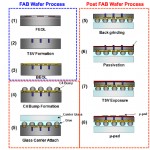

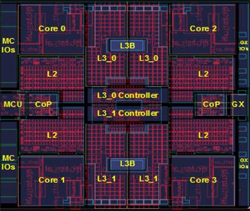

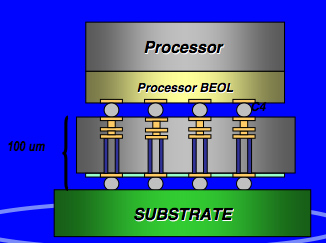


The picture to the right shows the basic architecture. On the top is the heatsinked processor die. There are no TSVs through the processor die. It is microbumped to attach to the memory die beneath it and to TSVs that go all the way through to carry the 200A of current that it requires direct from the package substrate. The processor/memory microbumps are at a 50um pitch. The memory die to package bumps are at 186um. The memory die has to be thinned in order to get the TSVs all the way through.

In addition, the memory die is chock-a-block with decoupling capacitors to reduce transient voltage droops. This allows for increased processor performance without having to give up area on the processor chip for the capacitors, since they are in the metal on the memory die. At the keynote, there was a video showing the dramatic difference in the voltage across the chip with and without this approach to supplying power.
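To get a feel for what the two bump figures mean, here is a back-of-the-envelope calculation of connection density. It is only a sketch under two assumptions that were not part of the keynote as I have described it: that the 186um figure is also a pitch, and that the bumps sit on a regular square grid.

```python
def bumps_per_mm2(pitch_um: float) -> float:
    """Bumps per square millimeter for a square grid at the given pitch.

    Assumption: one bump per pitch-by-pitch square, with no keep-out areas.
    """
    pitch_mm = pitch_um / 1000.0
    return 1.0 / (pitch_mm ** 2)


# Pitches quoted in the text, in micrometers.
MICROBUMP_PITCH_UM = 50.0      # processor die to memory die
PACKAGE_BUMP_PITCH_UM = 186.0  # memory die to package (assumed to be a pitch)

micro = bumps_per_mm2(MICROBUMP_PITCH_UM)       # about 400 per mm^2
package = bumps_per_mm2(PACKAGE_BUMP_PITCH_UM)  # about 29 per mm^2
ratio = micro / package                         # about 14x

print(f"microbumps:    {micro:.0f} per mm^2")
print(f"package bumps: {package:.1f} per mm^2")
print(f"density ratio: {ratio:.1f}x")
```

On those assumptions, the processor-to-memory interface offers roughly fourteen times as many connections per unit area as the memory-to-package interface, which is the whole point of stacking the dies face to face.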
